
TLDR: Use PowerShell to check whether the main file exists. If it doesn’t, create it, so that the next run can append the newest log file to it. Scenario: This script is for those who want to combine multiple files into 1 running file from an Azure Runbook.

How did this scenario come about?

I had a request from a client who was running an Azure Automation Runbook against their local AD environment. The runbook, written in PowerShell 5.1, would generate a report named after the current day plus the type of report (locked-out accounts, for example) and then push that file to a container in an Azure Storage Account. The client ran the runbook multiple times throughout the day and wanted a way to append the new data to that day’s original data without overwriting it. I looked and looked and didn’t find a way to do that with what I was working with: a runbook using PowerShell to join multiple files into 1 and put the result in blob storage.

Of course, everything and anything is possible with PowerShell – about 99% of the time. And so, I figured it out and I wanted to share how I did it.

Prerequisites:

PowerShell runtime version: 5.1

Modules used: Az.Accounts and Az.Storage.

Virtual Machine: I created an on-prem VM running Windows Server with those modules installed. I also onboarded that machine to my Azure tenant using Azure Arc, then created a Hybrid Worker Group with that machine in it, so when I run my runbook I can select that Hybrid Worker Group to run it against.

For the sake of this walkthrough, I used PowerShell to create a file with some data to simulate a file that already exists, like a client would have. I also created another file to simulate a ‘new’ log file that has to be combined into the first one. In my notes, I use the Write-Output cmdlet a lot, so I know exactly where I am when something fails during testing (‘oh, the last working line was XYZ, so this is where it failed’), but feel free to clean it up if you’d like.

Creating the Runbook: Authentication, Context and Variables

I always like to use a Managed Identity in my runbooks, so that is what I use here. The identity has the Contributor role over the subscription.

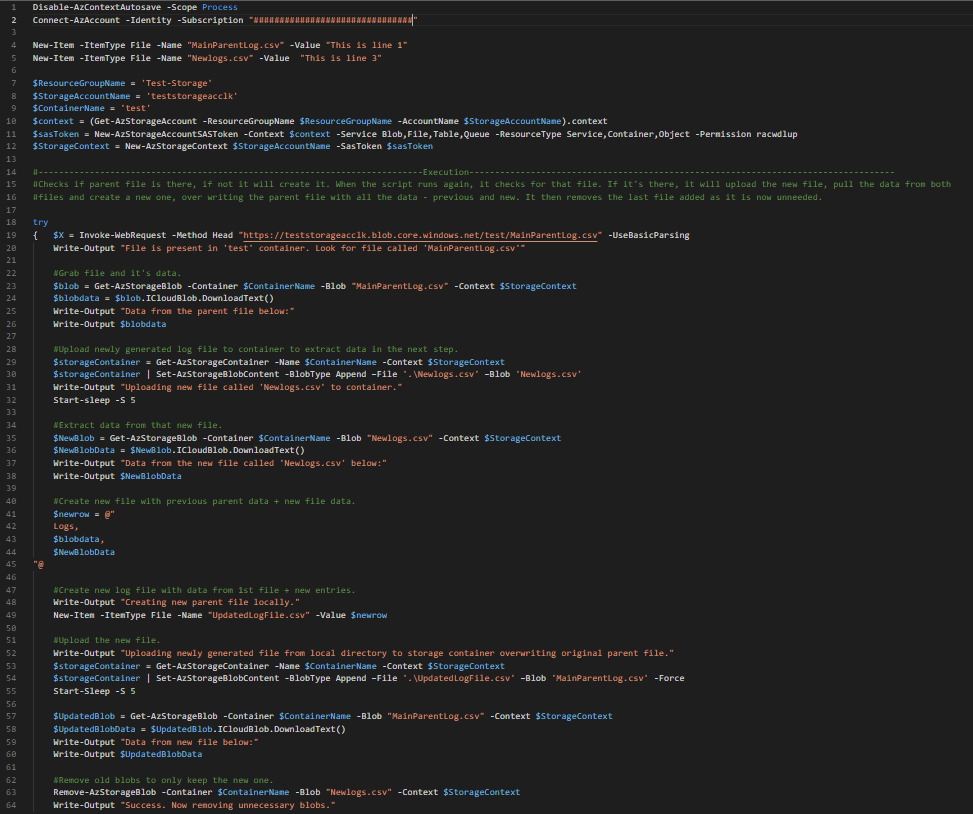

Disable-AzContextAutosave -Scope Process

Connect-AzAccount -Identity -Subscription "SubscriptionID here"

Next, I define my environment variables:

First, I create 2 files for my testing. One is the main parent file, called ‘MainParentLog.csv’, and the other is the test file I need to append to the parent, called ‘Newlogs.csv’.

New-Item -ItemType File -Name "MainParentLog.csv" -Value "This is line 1"

New-Item -ItemType File -Name "Newlogs.csv" -Value "This is line 3"

Then I define the other variables used later on by the Az.Storage cmdlets:

$ResourceGroupName = 'Test-Storage'

$StorageAccountName = 'teststorageacclk'

$ContainerName = 'test'

Finally, I create the storage context, which explicitly defines the environment I want to work in, by generating a SAS token and passing that token to the New-AzStorageContext cmdlet. No -ExpiryTime is set here, so the token’s expiration relies on the cmdlet’s default.

$context = (Get-AzStorageAccount -ResourceGroupName $ResourceGroupName -AccountName $StorageAccountName).context

$sasToken = New-AzStorageAccountSASToken -Context $context -Service Blob,File,Table,Queue -ResourceType Service,Container,Object -Permission racwdlup

$StorageContext = New-AzStorageContext $StorageAccountName -SasToken $sasToken

Creating the Runbook: Try/Catch Logic: Try block

Here’s what I want my runbook to do. Keep in mind that, hypothetically, a new file is generated every day, so imagine something called 11-27-22-ADReport.csv where the date in the name changes daily, ok? Ok. So, on a new day, I want my runbook to first check whether that day’s file is in the container. If it’s there, grab the data from the new file and append it to the ‘parent’ file. If it is not there, create it first, then continue.
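The walkthrough uses fixed file names, but the per-day name described above could be built along these lines. This is only a sketch: Get-DailyReportName is a hypothetical helper, and the MM-dd-yy pattern and the ‘ADReport’ suffix are assumptions taken from the example name 11-27-22-ADReport.csv.

```powershell
# Hypothetical helper (not part of the original script): builds a per-day
# report name such as 11-27-22-ADReport.csv.
function Get-DailyReportName {
    param(
        [datetime]$Date = (Get-Date),      # defaults to today
        [string]$ReportType = 'ADReport'   # assumed report-type suffix
    )

    # Format with the invariant culture so the name does not vary by locale
    $culture = [System.Globalization.CultureInfo]::InvariantCulture
    '{0}-{1}.csv' -f $Date.ToString('MM-dd-yy', $culture), $ReportType
}

# Example call with a fixed date
Get-DailyReportName -Date ([datetime]'2022-11-27')
```

The returned name could then be passed wherever the walkthrough uses "MainParentLog.csv".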

In the try block, I first check whether the file for the day is there by sending a HEAD request to the blob’s URL through the Blob Service REST API.

try

{ $X = Invoke-WebRequest -Method Head "https://teststorageacclk.blob.core.windows.net/test/MainParentLog.csv" -UseBasicParsing

Write-Output "File is present in 'test' container. Look for file called 'MainParentLog.csv'"

If the file is there, cool, let’s go ahead and grab the data stored in it and put it in a variable called $blobdata. Like this:

#Grab parent file and its data.

$blob = Get-AzStorageBlob -Container $ContainerName -Blob "MainParentLog.csv" -Context $StorageContext

$blobdata = $blob.ICloudBlob.DownloadText()

Write-Output "Data from the parent file below:"

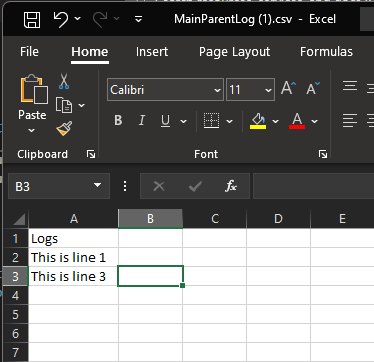

Write-Output $blobdata

The value of that file is, of course, “This is line 1”. Keep that in mind.

Now the next part could probably be done better, but hey it works so let’s continue on. Next, I grab that new file and upload it to the container and add some time delay to it.

#Upload newly generated log file to container to extract data in the next step.

$storageContainer = Get-AzStorageContainer -Name $ContainerName -Context $StorageContext

$storageContainer | Set-AzStorageBlobContent -BlobType Append -File '.\Newlogs.csv' -Blob 'Newlogs.csv'

Write-Output "Uploading new file called 'Newlogs.csv' to container."

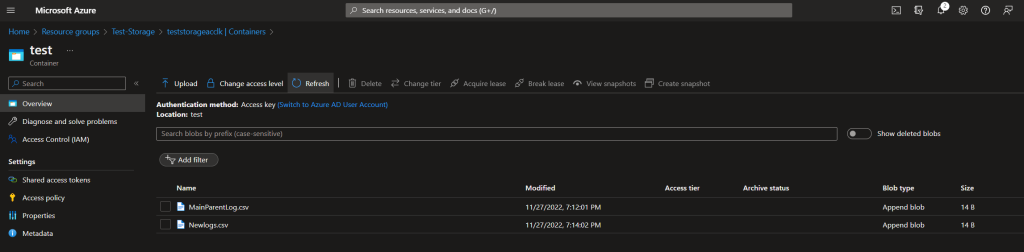

Start-Sleep -Seconds 5

You can briefly see the new file displayed in the portal, but it gets removed later, which is explained further on:

Now that the file is there, with new data, let’s also extract that data and store it in another variable called $NewBlobData:

#Extract data from that new file.

$NewBlob = Get-AzStorageBlob -Container $ContainerName -Blob "Newlogs.csv" -Context $StorageContext

$NewBlobData = $NewBlob.ICloudBlob.DownloadText()

Write-Output "Data from the new file called 'Newlogs.csv' below:"

Write-Output $NewBlobData

Now we take whatever data is in the parent file and append the new data to it. We do this by building the content of another CSV file with a column header called ‘Logs’, placing the 2 pieces of data (previous and new) below it, and storing the result in the $newrow variable:

#Create new file with previous parent data + new file data.

$newrow = @"

Logs,

$blobdata,

$NewBlobData

"@

#Create new log file with data from 1st file + new entries.

Write-Output "Creating new parent file locally."

New-Item -ItemType File -Name "UpdatedLogFile.csv" -Value $newrow

Now we upload that new file to the container.
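Before uploading, one thing to watch with the here-string above: on a later run, $blobdata would already contain the ‘Logs,’ header from the previous run, so the header would likely be written again. A minimal sketch of one way around that is below. Join-LogData is a hypothetical helper, not part of Az.Storage or the original script; it assumes each log entry sits on its own line, and it drops blank lines, trailing commas, and repeated header rows before joining, so the output format differs slightly from the one shown above.

```powershell
# Hypothetical helper (not part of the original script): merges previous and
# new log text under a single header row.
function Join-LogData {
    param(
        [string]$ExistingData,
        [string]$NewData,
        [string]$Header = 'Logs'
    )

    # Split both inputs into lines, then drop blank lines, trailing commas,
    # and any header row carried over from an earlier run
    $lines = @($ExistingData, $NewData) |
        ForEach-Object { $_ -split "`r?`n" } |
        ForEach-Object { $_.Trim().TrimEnd(',') } |
        Where-Object { $_ -and $_ -ne $Header }

    # Emit the header once, followed by every remaining data line
    (@($Header) + @($lines)) -join [Environment]::NewLine
}

# Example call using the sample values from this walkthrough
$merged = Join-LogData -ExistingData "Logs,`nThis is line 1," -NewData 'This is line 3'
Write-Output $merged
```

The merged text could then be written locally with Set-Content -Path '.\UpdatedLogFile.csv' -Value $merged, which overwrites an existing local file, whereas New-Item without -Force fails if the file is already there.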

#Upload the new file.

Write-Output "Uploading newly generated file from local directory to storage container overwriting original parent file."

$storageContainer = Get-AzStorageContainer -Name $ContainerName -Context $StorageContext

$storageContainer | Set-AzStorageBlobContent -BlobType Append -File '.\UpdatedLogFile.csv' -Blob 'MainParentLog.csv' -Force

Start-Sleep -Seconds 5

Now, you can remove this next step, but while troubleshooting I wanted to confirm everything was the way I wanted it to be, so I pull the contents of that new file just because.

$UpdatedBlob = Get-AzStorageBlob -Container $ContainerName -Blob "MainParentLog.csv" -Context $StorageContext

$UpdatedBlobData = $UpdatedBlob.ICloudBlob.DownloadText()

Write-Output "Data from new file below:"

Write-Output $UpdatedBlobData

Now it’s time for the cleanup. I really only want 1 blob with all the logs, not 1 container with hundreds or thousands of log blobs. So, I remove everything that’s not the MainParentLog.csv blob. Again, you can do this multiple ways, but I find this is the quick and dirty way of doing it:

#Remove old blobs to only keep the new one.

Remove-AzStorageBlob -Container $ContainerName -Blob "Newlogs.csv" -Context $StorageContext

Write-Output "Success. Now removing unnecessary blobs."

}

Creating the Runbook: Try/Catch Logic: Catch block

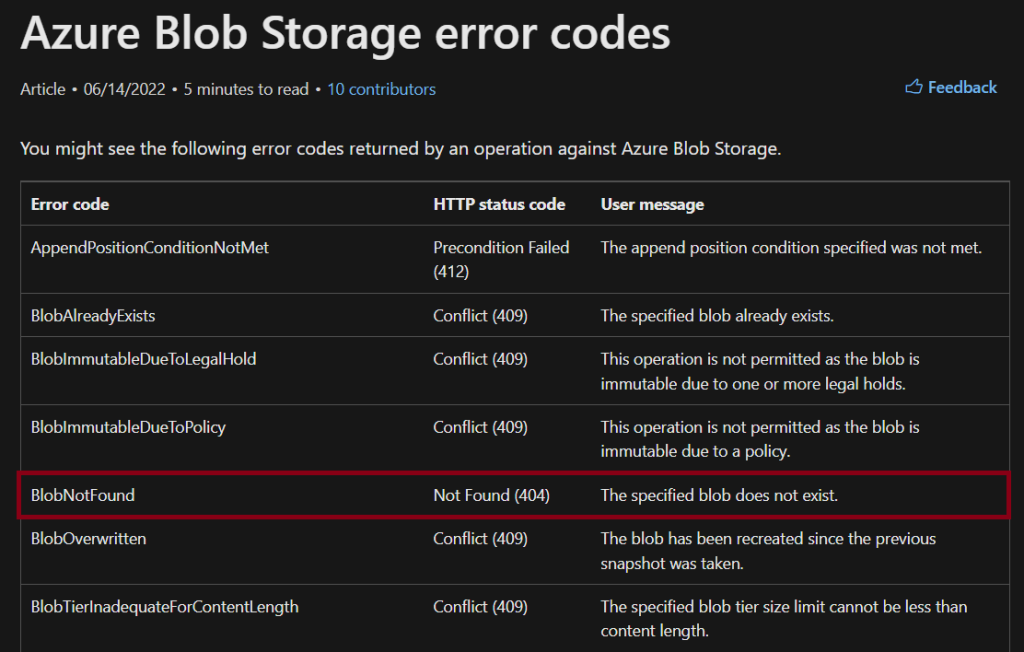

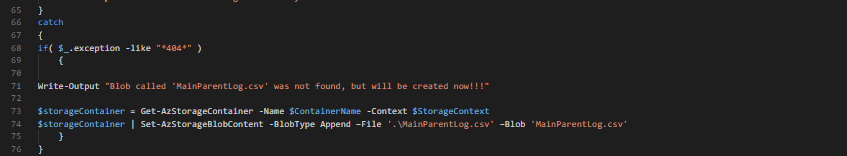

When this script checks for the parent file in the storage container and the file is not there, the API request returns a 404 error, which means the blob was not found. For a list of blob storage error codes, see Microsoft’s Blob Storage error code documentation.
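Matching on the text *404* works, but it could also match an unrelated error message that happens to contain 404. Here is a sketch of a stricter check, assuming the error comes from Invoke-WebRequest and that the exception exposes a Response with a StatusCode (as System.Net.WebException does in Windows PowerShell 5.1). Test-BlobNotFound is a hypothetical helper name, not an Az cmdlet.

```powershell
# Hypothetical helper (not part of the original script): returns $true only
# when the caught error carries an HTTP 404 status code.
function Test-BlobNotFound {
    param(
        [Parameter(Mandatory)]
        $ErrorRecord   # the $_ value available inside a catch block
    )

    # Errors that are not HTTP failures may have no Response at all
    $response = $ErrorRecord.Exception.Response
    if ($null -eq $response) { return $false }

    # StatusCode is an HttpStatusCode enum; cast it to compare numerically
    return ([int]$response.StatusCode -eq 404)
}
```

Inside the catch block, the condition could then read if (Test-BlobNotFound $_) instead of the -like comparison.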

So, since the main parent blob/file isn’t there, we need to create it with the first data of the day.

catch

{

if( $_.exception -like "*404*" )

{

Write-Output "Blob called 'MainParentLog.csv' was not found, but will be created now!!!"

$storageContainer = Get-AzStorageContainer -Name $ContainerName -Context $StorageContext

$storageContainer | Set-AzStorageBlobContent -BlobType Append -File '.\MainParentLog.csv' -Blob 'MainParentLog.csv'

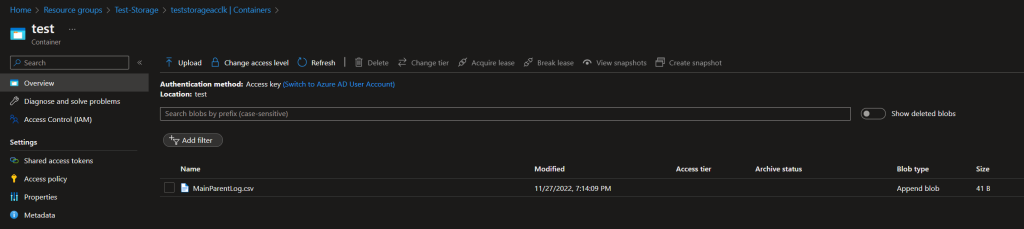

}

}

Now, the next time this runbook runs, it will first check whether the file is there, find it, and proceed with adding the new data to it. For example, in this walkthrough, instead of 2 files I now have only 1 with the data of both:

Here is the full script:

Conclusion:

PowerShell allows you to do anything you want, or almost anything at least. Sometimes you can do it, but the real question is whether you should, and why. PowerShell may not be the best tool for your scenario, or the tool may already exist in a different technology.

I learned a lot from creating this script. I learned how to manipulate the data the way I want it. I learned more about the Azure Storage cmdlets and what they need in order to work. And I also learned more about try/catch logic!

If you can see a way of making this better, or if the tool already exists, please share!

Thank you
